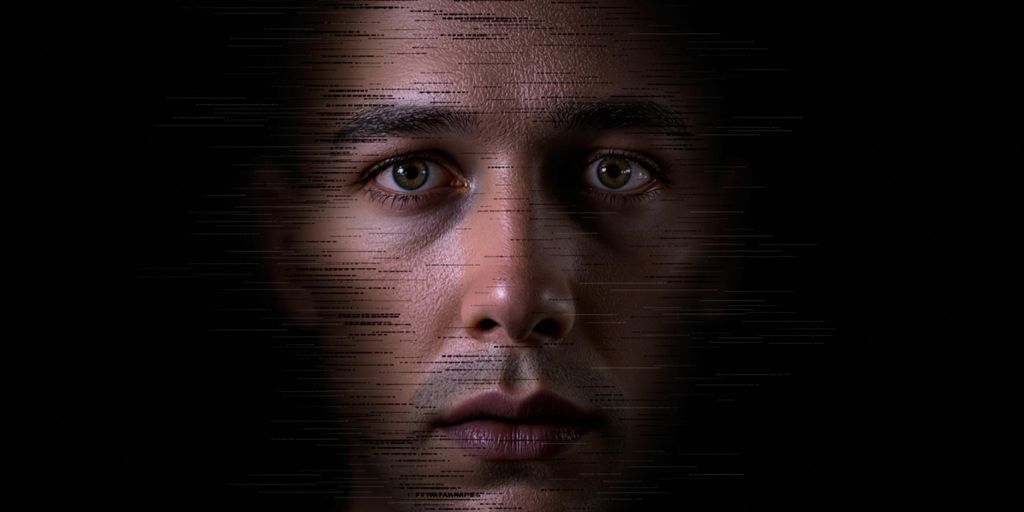

Deepfake Dangers—Why AI in Media Needs Regulation

Deepfake AI has been making waves in the media world, and not always in a good way. This tech can create fake videos and audio that look and sound real, which is both fascinating and scary. While it has some cool uses, like in movies, it also raises big questions about trust, privacy, and even politics. Let’s break down why we need rules to keep this technology in check.

Key Takeaways

- Deepfake AI can create fake but realistic videos and audio, causing trust issues.

- This technology has been used for harmful purposes like spreading fake news.

- There are ethical concerns, especially around privacy and manipulation.

- Current laws aren’t enough to deal with the problems deepfakes cause.

- New rules and tech tools are needed to spot and control deepfakes.

Understanding the Rise of Deepfake AI

How Deepfake Technology Works

Deepfake technology uses artificial intelligence, specifically deep learning and neural networks, to create highly realistic fake media. It works by analyzing and mimicking patterns in existing data, such as a person’s voice or facial expressions, to generate synthetic versions that appear authentic. The result is content so convincing that it can easily deceive the average viewer.

Key steps in how it works:

- Collecting a large dataset of the target’s images, videos, or audio.

- Training an AI model to learn the unique features of the target.

- Generating new media by blending the learned patterns with fresh inputs.

The process is complex but, thanks to advancements in computing power, it’s becoming more accessible to non-experts.
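To make the three steps above more concrete, here is a simplified formulation of the face-swap setup popularized by early open-source deepfake tools. It assumes one encoder $E$ shared by two people, A and B, plus a separate decoder for each ($D_A$, $D_B$); the symbols are our own notation, and modern tools layer many refinements (adversarial losses, masking, blending) on top. Collecting the face sets $\mathcal{X}_A$ and $\mathcal{X}_B$ is step 1, minimizing the reconstruction error is step 2, and the swap is step 3:

```math
\begin{aligned}
\text{train:}\quad & \min_{E,\,D_A,\,D_B} \sum_{x \in \mathcal{X}_A} \lVert x - D_A(E(x)) \rVert^2 + \sum_{y \in \mathcal{X}_B} \lVert y - D_B(E(y)) \rVert^2 \\
\text{swap:}\quad & \hat{y} = D_B\big(E(x_{\text{new}})\big), \quad x_{\text{new}} \text{ a fresh frame of person A}
\end{aligned}
```

The usual intuition is that the shared encoder learns features common to both faces, such as pose and expression, while each decoder learns to render one specific face. Decoding A's frame with B's decoder therefore yields B's face wearing A's pose and expression.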

The History of Deepfake Development

Deepfake technology has its roots in the early 2010s, fueled by the rise of machine learning and improved algorithms. Initially, it was a niche experiment in academic and tech circles. In 2017, however, the term "deepfake" was popularized after a Reddit user called "deepfakes" and others in online communities began sharing manipulated videos, often for entertainment or malicious purposes.

Some milestones in its development:

- 2014: The introduction of Generative Adversarial Networks (GANs), a key technology behind deepfakes.

- 2017: The release of publicly available deepfake software on forums.

- 2020s: Mainstream attention as deepfakes infiltrated politics, entertainment, and scams.

What started as a technological curiosity quickly evolved into a global concern as its misuse became apparent.

Key Players in the Deepfake Industry

The deepfake industry is a mix of legitimate developers and bad actors. On one end, companies and researchers are exploring ethical applications, like dubbing movies or preserving historical figures. On the other, malicious users exploit the technology for scams, misinformation, and exploitation.

Some notable players include:

- Tech giants like Adobe and NVIDIA, which are developing AI tools for creative purposes.

- Independent developers who release open-source deepfake software.

- Cybercriminals leveraging deepfakes for phishing scams, identity theft, and fraud.

With the rise of deepfake scams, it’s clear that the line between innovation and misuse is razor-thin. The technology itself isn’t inherently bad—it’s how it’s used that determines its impact.

For more context on the dangers of deepfake scams, see the article "Deepfake scams pose a significant threat."

The Ethical Implications of Deepfake AI

Manipulation of Public Opinion

Deepfake technology has the power to distort public opinion in ways we’ve never seen before. Imagine a fake video of a political leader announcing a controversial decision—it could spark chaos before anyone realizes it’s fake. This ability to fabricate convincing falsehoods undermines trust in media and public figures. In a world already struggling with misinformation, deepfakes throw gasoline on the fire.

Here’s how deepfakes can manipulate:

- Fake speeches or announcements from public officials.

- Fabricated videos that sway voter sentiment during elections.

- False "evidence" used to discredit or frame individuals.

Impact on Personal Privacy

Deepfakes don’t just target public figures—they can destroy the lives of everyday people, too. Imagine someone using your face in a compromising video. Even if proven fake, the damage to your reputation can be irreversible. Victims often face harassment, job loss, or even legal troubles because of these fakes.

Quick Facts:

| Privacy Concern | Example Scenario |
|---|---|
| Non-consensual content | Fake intimate videos of individuals |
| Identity theft | Using faces in fraudulent activities |
| Harassment | Targeting individuals with fake media |

Challenges in Identifying Deepfakes

Spotting a deepfake isn’t as easy as it sounds. The technology keeps improving, making it harder to tell what’s real and what’s fake. Even experts sometimes struggle to identify them. This creates a huge problem for law enforcement, journalists, and even everyday users trying to verify information.

Some challenges include:

- The rapid advancement of AI tools making detection harder.

- Lack of public awareness about spotting deepfakes.

- Limited access to reliable detection tools for the average person.

The ethical dilemmas surrounding deepfakes highlight the urgent need for robust AI legislation to protect individuals and society as a whole. Without it, trust in what we see and hear will continue to erode.

Real-World Examples of Deepfake AI Misuse

Political Propaganda and Fake News

Deepfakes have become a dangerous tool in the political arena. By creating fake videos of politicians delivering inflammatory speeches or endorsing controversial policies, bad actors can sow confusion and distrust. This manipulation can escalate tensions and even influence election outcomes. For example, a fabricated video showing a political leader admitting to corruption could go viral before it’s debunked, leaving lasting damage. The challenge is that by the time the truth emerges, the public's perception might already be skewed.

Celebrity Deepfakes and Exploitation

Celebrities are frequent targets of deepfake abuse, often in disturbing ways. Fraudulent, sexualized videos and images of well-known female stars and athletes have surfaced online, tarnishing reputations and invading privacy. Companies like Meta have started addressing this issue, especially after investigations such as one by CBS News revealed how widespread this content was. Meta's removal of these harmful materials highlights the urgent need for better oversight and technology to combat such violations.

Corporate Espionage and Fraud

Deepfake technology isn't limited to political or personal misuse; it's also infiltrating the corporate world. Scammers are using AI-generated voices to impersonate CEOs and other executives, tricking employees into transferring funds or sharing confidential information. In one widely reported 2019 case, the chief executive of a UK energy firm wired about €220,000 (roughly $243,000) after a phone call in which scammers used an AI-generated imitation of his boss's voice. These incidents underscore the growing risks businesses face in an era where seeing, or hearing, is no longer believing.

The misuse of deepfake AI is a stark reminder of how technology, while innovative, can also be weaponized. Addressing these issues requires a mix of public awareness, corporate responsibility, and stronger regulations.

The Role of Regulation in Controlling Deepfake AI

Current Laws Addressing Deepfake Technology

Right now, laws around deepfake tech are kind of all over the place. Some countries have started to take action, but it's still pretty patchy. In the U.S., for example, states like California and Virginia have laws targeting deepfake misuse, especially when it comes to elections or explicit content. But there's no comprehensive federal deepfake law, which leaves a lot of gaps. The European Union, on the other hand, is trying to tackle the issue more broadly with its AI Act.

In New Jersey, legislation like A 2364 is a step forward. It specifically addresses deepfake pornography and sets penalties for sharing such media without consent. The state is even planning a Deep Fake Technology Unit to deal with these problems. This kind of focused approach could be a blueprint for others.

Proposed Policies for AI Governance

If we’re going to get serious about deepfake regulation, governments need to think bigger. Here are a few ideas that could work:

- Mandatory labeling: Any media created using AI should come with a clear label so people know it’s not real.

- Stronger penalties: Make the consequences harsher for people who misuse deepfakes, especially for fraud or harassment.

- AI auditing: Companies developing these technologies should undergo regular audits to make sure they’re not enabling harmful uses.
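One way to make a mandatory label hard to tamper with is to bind it cryptographically to the exact media it describes. The Python sketch below is a minimal illustration under stated assumptions: the label fields, the `make_label`/`verify_label` functions, and the shared secret key are all hypothetical, and HMAC stands in for the public-key signatures that real provenance standards (such as C2PA Content Credentials) use so that anyone can verify a label without holding a secret.

```python
import hashlib
import hmac
import json

# Hypothetical signing key held by the AI tool vendor. A real scheme would use a
# public-key signature so that verifiers never need to know a secret.
SIGNING_KEY = b"demo-key-not-for-production"


def _canonical(payload: dict) -> bytes:
    """Serialize deterministically so the same payload always yields the same bytes."""
    return json.dumps(payload, sort_keys=True).encode("utf-8")


def make_label(media: bytes, tool_name: str) -> dict:
    """Create an 'AI-generated' label tied to the exact bytes of the media."""
    payload = {
        "ai_generated": True,
        "tool": tool_name,
        "content_sha256": hashlib.sha256(media).hexdigest(),
    }
    signature = hmac.new(SIGNING_KEY, _canonical(payload), hashlib.sha256).hexdigest()
    return {"payload": payload, "signature": signature}


def verify_label(media: bytes, label: dict) -> bool:
    """Return True only if the label is unmodified and still matches the media."""
    expected = hmac.new(SIGNING_KEY, _canonical(label["payload"]), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, label["signature"]):
        return False  # label fields were edited or forged
    return label["payload"]["content_sha256"] == hashlib.sha256(media).hexdigest()


if __name__ == "__main__":
    video = b"...synthetic video bytes..."
    label = make_label(video, "example-generator")
    print(verify_label(video, label))         # True
    print(verify_label(video + b"x", label))  # False: the media no longer matches
```

This shows what a label can and can't guarantee: it can't be quietly edited or moved onto different content without failing verification, but nothing stops someone from stripping it off entirely, which is why labeling works best alongside detection tools and stronger penalties.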

These policies won’t solve everything, but they’d be a good start. The key is balancing innovation with accountability.

International Collaboration on AI Regulation

Deepfakes don’t stop at borders, so why should the rules? Countries need to work together on this. Imagine if we had a global agreement on AI ethics, kind of like the Paris Agreement for climate change. It won’t be easy, but it’s worth trying.

Some international groups are already talking about this (UNESCO and the OECD, for example, have both adopted AI ethics principles), but progress is slow. The challenge is getting everyone on the same page when priorities differ so much. Still, global collaboration is probably the only way to really tackle the deepfake problem at scale.

It’s not just about making rules; it’s about keeping up with tech that changes faster than the laws can. That’s the real challenge here.

Technological Solutions to Combat Deepfake AI

AI Tools for Deepfake Detection

AI-powered detection tools are one of the first lines of defense against deepfakes. These systems analyze videos and images for inconsistencies, like unnatural blinking or mismatched lighting. These tools are evolving fast to keep up with increasingly convincing deepfakes. Some popular methods include:

- Examining pixel-level artifacts that reveal manipulation.

- Using audio analysis to detect mismatched lip-syncing.

- Training neural networks to recognize patterns that distinguish authentic media from synthetic media.
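Of the cues mentioned above, unnatural blinking is the easiest to illustrate. The sketch below uses the eye aspect ratio (EAR), a heuristic from published blink-detection research that drops sharply when the eye closes; early deepfakes were observed to blink unusually rarely. It assumes you already have six landmark points per eye for every frame from some face-landmark detector (not shown). The thresholds (`0.2` EAR, 2 frames, 5 blinks per minute) and function names are hypothetical, chosen for illustration rather than tuned on real data.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR for six eye landmarks p1..p6.

    p1 and p4 are the eye corners; p2, p3 lie on the upper lid and p6, p5 on the
    lower lid, so (p2, p6) and (p3, p5) are vertical pairs.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ears: List[float], threshold: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks = 0
    run = 0
    for ear in ears:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress at the last frame
        blinks += 1
    return blinks


def blink_rate_suspicious(ears: List[float], fps: float, min_blinks_per_minute: float = 5.0) -> bool:
    """Flag a clip whose blink rate falls below a (hypothetical) plausibility threshold."""
    minutes = len(ears) / fps / 60.0
    if minutes == 0:
        return False
    return count_blinks(ears) / minutes < min_blinks_per_minute
```

Modern generators often reproduce natural blinking, so a check like this is at best one weak signal; practical detectors combine many cues, usually with trained models.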

Blockchain for Media Authentication

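Blockchain technology offers a promising way to verify the authenticity of digital content. By recording a cryptographic fingerprint (hash) of a video or image on a blockchain, along with metadata such as its creator and creation time, creators can later prove that the file hasn't been tampered with since it was registered. Key features include: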

| Feature | Benefit |
|---|---|
| Immutable Records | Ensures recorded data can't be altered after the fact. |
| Transparent History | Tracks every recorded edit or addition. |
| Decentralization | Reduces the risk of a single point of failure. |
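The features in the table can be illustrated with a toy hash chain, the core data structure behind blockchains. This is a minimal sketch, not a real blockchain: it runs in a single process, with no network, consensus, or decentralization, and the `MediaLedger` and `Block` names are hypothetical. Only the SHA-256 fingerprint of each file is stored, and each block records the hash of the block before it, so altering an earlier record breaks the link that follows it.

```python
import hashlib
import json
import time
from dataclasses import asdict, dataclass, field
from typing import List, Optional


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


@dataclass
class Block:
    index: int
    media_hash: str   # fingerprint of the registered file (the file itself is not stored)
    creator: str
    timestamp: float
    prev_hash: str    # hash of the previous block; this is what chains records together

    def block_hash(self) -> str:
        record = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return sha256_hex(record)


@dataclass
class MediaLedger:
    """Toy append-only hash chain: single process, no network, no consensus."""
    blocks: List[Block] = field(default_factory=list)

    def register(self, media: bytes, creator: str) -> Block:
        prev = self.blocks[-1].block_hash() if self.blocks else "0" * 64
        block = Block(len(self.blocks), sha256_hex(media), creator, time.time(), prev)
        self.blocks.append(block)
        return block

    def find(self, media: bytes) -> Optional[Block]:
        """Return the first block that registered exactly these bytes, if any."""
        h = sha256_hex(media)
        return next((b for b in self.blocks if b.media_hash == h), None)

    def chain_is_valid(self) -> bool:
        """Detect tampering with any block that a later block points to."""
        for prev, cur in zip(self.blocks, self.blocks[1:]):
            if cur.prev_hash != prev.block_hash():
                return False
        return True
```

Anyone holding a copy of the ledger can re-hash a file and call `find`: a match shows the file is byte-for-byte what was registered, while even a one-byte edit produces a different hash. The limit is worth stating plainly: this proves a file hasn't changed since registration, not that its content was truthful to begin with.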

The Role of Social Media Platforms

Social media platforms play a huge role in spreading or stopping deepfakes. Companies can:

- Implement stricter content moderation policies.

- Use AI to flag and remove suspicious uploads.

- Provide users with tools to report potential deepfakes.
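Here's a hedged sketch of how the three measures above might fit together in a moderation pipeline. Everything in it is hypothetical: the detector score (assumed to be a value between 0 and 1 from some deepfake classifier), the thresholds, and the `triage` function itself. The design choice it illustrates is that detector scores are uncertain, so only the clearest cases are removed automatically, borderline uploads get a label, and high scores or repeated user reports go to human reviewers.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    LABEL = "label as possibly AI-altered"
    HUMAN_REVIEW = "send to human review"
    REMOVE = "remove"


def triage(
    detector_score: float,      # hypothetical classifier output in [0, 1]; higher = more likely fake
    user_reports: int,          # number of user reports on this upload
    prohibited_category: bool,  # e.g. content the platform bans outright when faked
    *,
    label_at: float = 0.5,
    review_at: float = 0.8,
    remove_at: float = 0.95,
    reports_for_review: int = 3,
) -> Action:
    """Map a detector score and user reports to a moderation action (illustrative thresholds)."""
    # Remove automatically only when the model is very confident AND the content
    # falls into a category the platform prohibits outright.
    if prohibited_category and detector_score >= remove_at:
        return Action.REMOVE
    # High scores or repeated user reports go to a person instead of being auto-removed.
    if detector_score >= review_at or user_reports >= reports_for_review:
        return Action.HUMAN_REVIEW
    # Borderline scores: keep the upload but warn viewers.
    if detector_score >= label_at:
        return Action.LABEL
    return Action.ALLOW


if __name__ == "__main__":
    print(triage(0.6, 0, False).name)   # LABEL
    print(triage(0.2, 4, False).name)   # HUMAN_REVIEW
    print(triage(0.97, 0, True).name)   # REMOVE
```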

It’s clear that combating deepfakes requires a mix of technology, policy, and public awareness. Platforms can’t do it alone, but they are a critical piece of the puzzle.

Government research plays a part too: DARPA's programs, such as Media Forensics (MediFor) and Semantic Forensics (SemaFor), are pushing the boundaries of what's possible in detecting and preventing deepfake misuse. Their work is a reminder that collaboration between public and private sectors is essential.

The Future of Deepfake AI in Media

Potential Benefits of Deepfake Technology

Deepfake AI isn’t just about creating fake videos or manipulating voices—it’s also opening doors to some pretty interesting possibilities. For example, imagine being able to generate life-like video content for education or entertainment without needing a huge production team. This could mean more accessible, lower-cost media for everyone. It’s not all bad; there’s potential for innovation here.

Some potential benefits include:

- Streamlined content creation for movies, games, and advertisements.

- Enhanced accessibility, like dubbing videos into multiple languages quickly.

- Creative tools for artists and storytellers to experiment with new ideas.

Balancing Innovation and Responsibility

Of course, with great power comes great responsibility. While deepfake tech can do a lot of good, it’s also a double-edged sword. Developers and users need to think hard about how they’re using this stuff. Are they helping society, or are they just adding to the chaos? Balancing the innovation with ethical use is going to be a big challenge.

Predictions for AI-Driven Media

Looking ahead, deepfake AI is likely to become even more realistic and harder to spot. But that doesn’t mean it’s all doom and gloom. Here’s what might happen:

- Governments and tech companies could team up to set stricter rules.

- AI tools for detecting fakes will probably get a lot better.

- Media platforms might start flagging or labeling content that’s been AI-altered.

The future of deepfake AI isn’t set in stone. It’s up to all of us—creators, regulators, and everyday users—to shape how it gets used.

Wrapping It Up

At the end of the day, deepfakes are more than just a tech trend—they’re a real problem that’s not going away anytime soon. Sure, they can be fun or even useful in some cases, but the risks are just too big to ignore. From fake news to personal harm, the potential for misuse is huge. That’s why it’s time to take a hard look at how we regulate this stuff. It’s not about stopping progress; it’s about making sure it doesn’t hurt people along the way. If we don’t act now, we might end up in a world where we can’t trust anything we see or hear. And honestly, who wants that?

Frequently Asked Questions

What is deepfake technology?

Deepfake technology uses artificial intelligence to create fake videos, images, or audio that look or sound real. It swaps faces or changes voices to make it seem like someone did or said something they didn't.

Why are deepfakes considered dangerous?

Deepfakes can spread false information, harm people’s reputations, or trick others into believing something fake. They can also be used for scams and other illegal activities.

How can I tell if a video is a deepfake?

It can be tricky, but you can look for strange movements, mismatched lighting, or odd facial expressions. Some tools and apps are also designed to spot deepfakes.

Are there any laws against deepfakes?

Some places have laws to stop the misuse of deepfakes, like banning fake videos that harm others. However, many countries are still figuring out how to regulate them.

What can be done to stop deepfake misuse?

Using detection tools, educating people about deepfakes, and creating strict rules can help reduce their misuse. Social media platforms also play a big role in controlling fake content.

Can deepfakes be used for good purposes?

Yes, deepfakes can be helpful in movies, education, or creating fun content. The key is to use them responsibly and not to mislead or harm others.